Importing from CSV to SQLite in Go

- 6 minutes read - 1172 wordsThis is the eighth in a series of articles about writing a small reading list app in Go for personal use.

Sometimes there are operations that need to be done on the app that don’t need to be built into the frontend. Administrative process like backups, database migrations, or – the thing we’re going to do today – bulk data import.

I’ve got a CSV dump of my Goodreads books, and I want to import those into Aklatan’s db. I only need to do this once, so I don’t need to mess around with making a web form for it. It just needs a backend administrative command to perform the import as a one-off process.

By the end of this post we’ll have:

- a command that loads data from the Goodreads CSV export into akalatan.db

- knowledge of how to use raw SQL – we won’t use Gorm for this task

And we’ll do it in less than 75 lines of code.

The Input Data

The program we want to write is simple: it needs to read rows from a CSV file, extract the fields of interest, and insert rows into the sqlite database.

Here’s a sample of the data (apologies for the wide format):

Book Id,Title,Author,Author l-f,Additional Authors,ISBN,ISBN13,My Rating,Average Rating,Publisher,Binding,Number of Pages,Year Published,Original Publication Year,Date Read,Date Added,Bookshelves,Bookshelves with positions,Exclusive Shelf,My Review,Spoiler,Private Notes,Read Count,Recommended For,Recommended By,Owned Copies,Original Purchase Date,Original Purchase Location,Condition,Condition Description,BCID

400354,"Men at Arms (Discworld, #15; City Watch #2)",Terry Pratchett,"Pratchett, Terry",,"=""0061092193""","=""9780061092190""",0,4.37,HarperPrism,Mass Market Paperback,377,2003,1993,,2020/04/26,"to-read, wip-series, owned","to-read (#125), wip-series (#7), owned (#8)",to-read,,,,0,,,0,,,,,

6977769,"61 Hours (Jack Reacher, #14)",Lee Child,"Child, Lee",,"=""0385340583""","=""9780385340588""",0,4.11,Delacorte Press,Hardcover,383,2010,2010,,2021/08/05,"to-read, wip-series","to-read (#204), wip-series (#21)",to-read,,,,0,,,0,,,,,

30413199,Concurrency in Go: Tools and Techniques for Developers,Katherine Cox-Buday,"Cox-Buday, Katherine",,"=""1491941197""","=""9781491941195""",3,4.24,O'Reilly Media,Paperback,238,2017,,,2021/02/15,programming,programming (#42),read,,,,1,,,0,,,,,

16299,And Then There Were None,Agatha Christie,"Christie, Agatha",,"=""0312330871""","=""9780312330873""",4,4.27,St. Martin's Press,Paperback,264,2004,1939,,2021/01/16,,,read,,,,1,,,0,,,,,

Parsing CSV is annoying and filled with booby traps. Fortunately there’s a package in the standard library: encoding/csv.

A Note on Quality

This is a “quick & dirty” importer, representing maybe an hour’s worth of work (including some manual testing and reading the docs to remember how to use certain packages). If this was something I was going to use on an ongoing basis I’d pull code out of main() and add some unit tests.

But it’s straightforward enough that when I need to reuse it I’ll be able to understand the code and make any changes needed at that time. (And I will revisit this command after some upcoming enhancements to Aklatan that add fields to the database.)

The Code

Since this is going to be built as a standalone command, we’ll use Go’s conventional directory structure and put it in ./cmd/importer/importer.go.

Our Makefile has been ready for this all along. We just need to define CMDS at the top. A rule further below will automagically build the command and leave it in the project root directory.

PROJECT := aklatan

CMDS := importer

ALLGO := $(wildcard *.go */*.go cmd/*/*.go)

ALLHTML := $(wildcard templates/*/*.html)

In importer.go, add some imports:

package main

import (

"database/sql"

"encoding/csv"

"errors"

"flag"

"io"

"log"

"os"

_ "github.com/mattn/go-sqlite3"

)

Then let’s define some command line arguments to control the input and output files – this is quick & dirty, but we’re not barbarians who would hard code the filenames, right?

func main() {

var dbName string

var csvName string

flag.StringVar(&dbName, "db", "", "SQLite database to import to")

flag.StringVar(&csvName, "csv", "", "CSV file to import from")

flag.Parse()

if dbName == "" || csvName == "" {

flag.PrintDefaults()

return

}

Next, open the database. Note that sql.Open doesn’t actually check that the file exists or can be written – so we call Ping to make sure we’re connected. Also note that we don’t bother with much error handling. If anything goes wrong, we just abort. After verifying the database is open, create the books table if it doesn’t already exist. (This duplicates the logic in the main app, but it’s ok for this command for now. If this was a production-grade command we’d make sure we weren’t duplicating logic.)

db, err := sql.Open("sqlite3", dbName)

if err != nil {

log.Fatal(err)

}

defer db.Close()

err = db.Ping()

if err != nil {

log.Fatalf("ping failed: %s", err)

}

stmt, err := db.Prepare("create table if not exists books (id integer primary key autoincrement, title text, author text)")

if err != nil {

log.Fatalf("prepare failed: %s", err)

}

_, err = stmt.Exec()

if err != nil {

log.Fatalf("exec failed: %s", err)

}

Then, open the CSV file. The csv package supports a few options for reading files because CSV isn’t exactly a well-defined standard, but we don’t need anything special in this case.

f, err := os.Open(csvName)

if err != nil {

log.Fatalf("open failed: %s", err)

}

r := csv.NewReader(f)

// Read the header row.

_, err = r.Read()

if err != nil {

log.Fatalf("missing header row(?): %s", err)

}

And finally we’re ready to loop over the records in the CSV. We grab the title and author, and insert them into a database record.

for {

record, err := r.Read()

if errors.Is(err, io.EOF) {

break

}

title := record[1]

author := record[2]

stmt, err = db.Prepare("insert into books(title, author) values(?, ?)")

if err != nil {

log.Fatalf("insert prepare failed: %s", err)

}

_, err = stmt.Exec(title, author)

if err != nil {

log.Fatalf("insert failed(%s): %s", title, err)

}

}

} // end of main()

And that’s all there is to it! Run make, grab your export from Goodreads (or use the sample csv above, or even adapt this example to any other similar service – just adjust the field numbers in the loop above), and run:

% ./importer -csv goodreads_library_export.csv -db aklatan.db

% echo 'select count(title) from books;' | sqlite3 aklatan.db

600

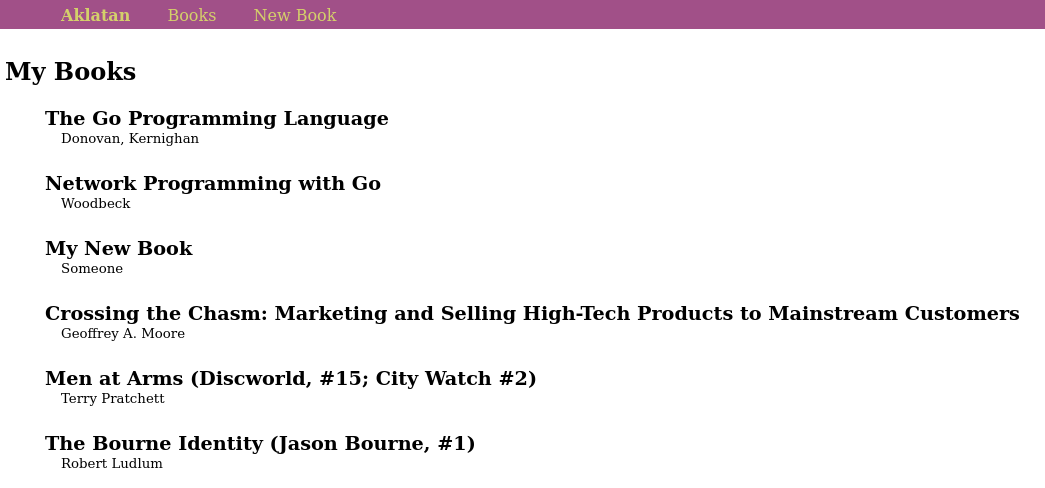

Excellent. Now our database is populated with 600 books. We can run aklatan and see these in the book list page.

A screenshot of aklatan after importing books from a CSV file.

A Potential Problem to Solve Next Week

With 600 books in the database I see log output like this when loading the book list:

[GIN] 2022/04/19 - 19:50:41 | 200 | 10.89955ms | 127.0.0.1 | GET "/books/"

[GIN] 2022/04/19 - 19:50:41 | 200 | 37.156µs | 127.0.0.1 | GET "/static/css/styles.css"

Ten milliseconds isn’t the end of the world, but it’s worth noting that when there are only 3 books in the database, the time is more like 600 microseconds. With complex records and much bigger database that may become an actual problem. Also, even though 10ms isn’t huge, we want to shave down as much as we can to avoid creating user-perceptible delays – because in a real app, both the external network and possibly other components in our deployment may add latency.

The strategy we’ll use to bring that time down by a factor of about 10 will be pagination, which we’ll explore next week.